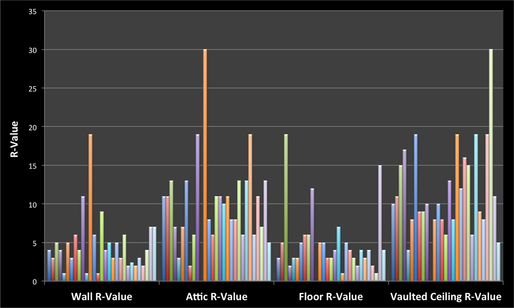

R-Value Quiz Questions

There are many factors that affect the accuracy of energy savings estimates; this post focuses primarily on software in general, and on input accuracy in particular. A software tool's "accuracy" comes down to the validity of its approach and algorithms combined with the quality of its data inputs. For a software product to deliver consistent and accurate results, it needs both a valid predictive model and reliable data.

If the values assigned to a building's components are incorrect, then the predicted savings for any given measure, or combination of measures, for that house are likely to be off. This is true even if the model has been calibrated using actual energy usage data.

For example, if a home energy auditor were to under-estimate the R-value (a measure of thermal resistance) of an existing insulated attic, then an improvement made to that attic would show inflated savings. This input error leads to incorrect expectations being set with customers, and to fewer public benefits than promised by utility efficiency portfolios that are approved by regulators and funded by ratepayers. For a program like Energy Upgrade California, where rebates are based on percentage reduction (similar to the Homes Act and 25E in the US Congress), this issue is particularly pronounced and may result in incorrect rebate payments to homeowners.
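To see why an under-estimated baseline matters so much, here is a minimal sketch that treats the attic as pure steady-state conduction (U = 1/R, heat loss proportional to U × area × heating degree-days). The function names, attic area, degree-days, and R-values are all hypothetical; real modeling tools account for far more than this.

```python
"""Sketch: how an under-estimated baseline R-value inflates predicted savings.

Assumptions (hypothetical, for illustration only): steady-state conduction,
U = 1/R, annual loss = U * area * degree-days * 24 hours. Framing, air leakage,
equipment efficiency, and real weather are ignored.
"""


def annual_conduction_loss_btu(r_value: float, area_ft2: float, hdd: float) -> float:
    """Annual conductive heat loss in Btu for an assembly of the given R-value."""
    u_factor = 1.0 / r_value  # Btu/(h·ft²·°F)
    return u_factor * area_ft2 * hdd * 24.0


def predicted_savings_btu(r_before: float, r_after: float, area_ft2: float, hdd: float) -> float:
    """Savings from upgrading an assembly from r_before to r_after."""
    return (annual_conduction_loss_btu(r_before, area_ft2, hdd)
            - annual_conduction_loss_btu(r_after, area_ft2, hdd))


if __name__ == "__main__":
    AREA = 1000.0   # hypothetical attic area, ft²
    HDD = 3000.0    # hypothetical heating degree-days, °F·day
    R_AFTER = 38.0  # hypothetical post-retrofit attic R-value

    actual = predicted_savings_btu(19.0, R_AFTER, AREA, HDD)     # attic really is R-19
    estimated = predicted_savings_btu(11.0, R_AFTER, AREA, HDD)  # auditor guesses R-11
    print(f"savings if baseline is R-19: {actual / 1e6:.2f} MMBtu")
    print(f"savings if baseline is guessed as R-11: {estimated / 1e6:.2f} MMBtu")
    print(f"over-prediction factor: {estimated / actual:.2f}x")
```

Because savings scale with 1/R_before − 1/R_after, the error is largest exactly when existing insulation is under-estimated: with these made-up inputs, guessing R-11 for an attic that is really R-19 inflates predicted savings by roughly 2.5 times, whatever area or climate is chosen.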

Recent analysis of the Energy Upgrade California program has returned some surprising results related to contractor performance. While there was a high level of overall variance, and the program over-estimated savings on average, when the data was broken down by contractor the realization rates (savings from billing analysis divided by predicted savings) were tightly clustered, with almost all contractor values overlapping once confidence intervals were taken into account. There was little apparent difference between contractors in the program.
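The per-contractor comparison above can be sketched in a few lines. This assumes the realization rate is computed per home as billing-analysis savings divided by predicted savings, averaged per contractor, with a normal-approximation 95% interval; the actual study's method may differ, and the records below are invented.

```python
"""Sketch: per-contractor realization rates with approximate 95% confidence intervals.

Assumptions: realization rate = billing-analysis savings / predicted savings,
computed per home and averaged per contractor; the interval is mean ± 1.96
standard errors. All records are hypothetical.
"""
import math
import statistics
from collections import defaultdict


def realization_rate(measured_savings: float, predicted_savings: float) -> float:
    """Fraction of predicted savings actually seen in the bills."""
    return measured_savings / predicted_savings


def rates_by_contractor(records):
    """Group (contractor, measured, predicted) tuples into per-contractor rate lists."""
    grouped = defaultdict(list)
    for contractor, measured, predicted in records:
        grouped[contractor].append(realization_rate(measured, predicted))
    return dict(grouped)


def mean_with_ci(rates, z: float = 1.96):
    """Return (mean, low, high) using a normal-approximation confidence interval."""
    mean = statistics.mean(rates)
    if len(rates) < 2:
        return mean, mean, mean  # a single home gives no spread estimate
    half_width = z * statistics.stdev(rates) / math.sqrt(len(rates))
    return mean, mean - half_width, mean + half_width


def intervals_overlap(a, b) -> bool:
    """True if two (mean, low, high) intervals overlap."""
    return a[1] <= b[2] and b[1] <= a[2]


if __name__ == "__main__":
    # Hypothetical (contractor, measured therms saved, predicted therms saved) per home.
    records = [
        ("A", 120, 200), ("A", 90, 180), ("A", 150, 210),
        ("B", 100, 190), ("B", 130, 220), ("B", 80, 160),
    ]
    summary = {c: mean_with_ci(r) for c, r in rates_by_contractor(records).items()}
    for contractor, (mean, low, high) in summary.items():
        print(f"{contractor}: RR = {mean:.2f} (95% CI {low:.2f} to {high:.2f})")
    print("overlap:", intervals_overlap(summary["A"], summary["B"]))
```

With only a handful of homes per contractor, a t-based interval would be wider and more appropriate; the normal approximation is used here only to keep the sketch short.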

The analysis tells us that the model being used in CA has a built-in propensity to over-predict baseline usage and therefore savings; however, it also tells us that the way the model is used is consistent across all contractors. Even though much has been made of how hard the current CA software tool is to use, the analysis of over 1,000 homes, including modeling data and actual bills, suggests that two contractors using the same tool on the same set of houses are likely to get similar results, because assumptions such as the R-values of assemblies are consistent.

Like most software tools, the software used in CA (EnergyPro 5) uses look-up tables that translate the assembly attributes an auditor sees in a home (e.g. 2x4 wall, no insulation, stucco exterior) into an R-value from a library of consistent values. The auditor or contractor inputs what they are looking at, which in some cases also includes attributes like quality, and an R-value is pulled from the table. If those assumptions are wrong, the overall model may have errors, but it appears that the CA system is managing one of the major sources of error: inconsistent input values.
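A look-up table of this kind might be sketched as follows. This is not EnergyPro's actual data structure; the `WallAssembly` fields, the quality multipliers, and every R-value in the table are hypothetical placeholders. The point is only that two auditors who enter the same description get the same number.

```python
"""Sketch: mapping observed wall attributes to a standardized R-value.

Hypothetical throughout: field names, table entries, and quality factors are
placeholders for illustration, not values from EnergyPro or any standard.
"""
from dataclasses import dataclass


@dataclass(frozen=True)
class WallAssembly:
    """What the auditor observes; frozen so it can serve as a dict key."""

    framing: str     # e.g. "2x4"
    insulation: str  # e.g. "none", "R-13 batt"
    exterior: str    # e.g. "stucco"


# Placeholder effective R-values (h·ft²·°F/Btu); illustrative only, not engineering data.
WALL_R_VALUES = {
    WallAssembly("2x4", "none", "stucco"): 4.0,
    WallAssembly("2x4", "R-13 batt", "stucco"): 11.0,
    WallAssembly("2x6", "R-19 batt", "stucco"): 15.0,
}

# Hypothetical installation-quality multipliers, applied the same way for every auditor.
QUALITY_FACTORS = {"good": 1.0, "fair": 0.9, "poor": 0.75}


def lookup_wall_r_value(assembly: WallAssembly, quality: str = "good") -> float:
    """Return the standardized R-value for an observed wall assembly."""
    if assembly not in WALL_R_VALUES:
        raise KeyError(f"no standard R-value for {assembly}; extend the table instead of guessing")
    base = WALL_R_VALUES[assembly]
    if assembly.insulation == "none":
        return base  # nothing in the cavity for install quality to degrade
    return base * QUALITY_FACTORS[quality]


if __name__ == "__main__":
    observed = WallAssembly("2x4", "R-13 batt", "stucco")
    # Any auditor entering this description and quality gets the same value.
    print(lookup_wall_r_value(observed, quality="fair"))
```

Raising an error for an unlisted assembly, rather than falling back to a typed-in number, is a deliberate choice in this sketch: it forces the table to grow instead of letting free-form values creep back in.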

The question is: how important is it to standardize input values such as the R-value of a wall assembly, and can contractors or auditors reliably and accurately estimate these values without the aid of look-up tables?

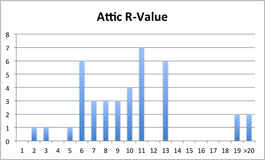

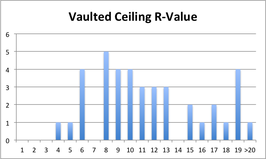

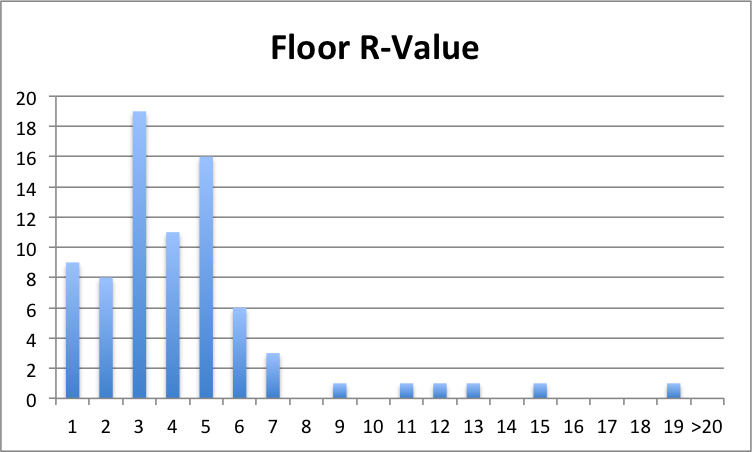

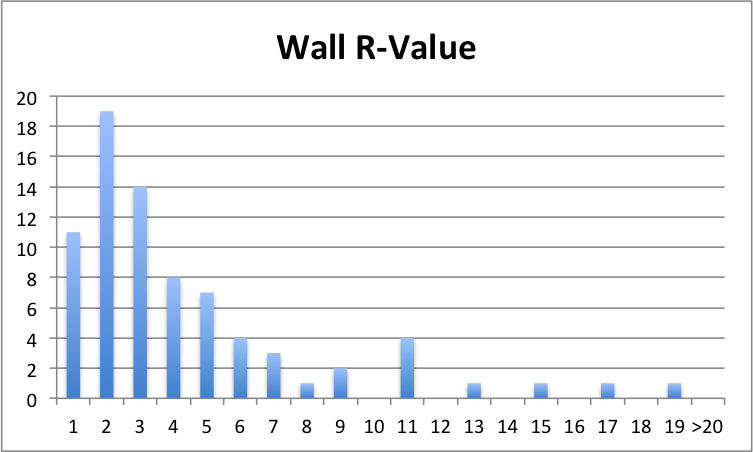

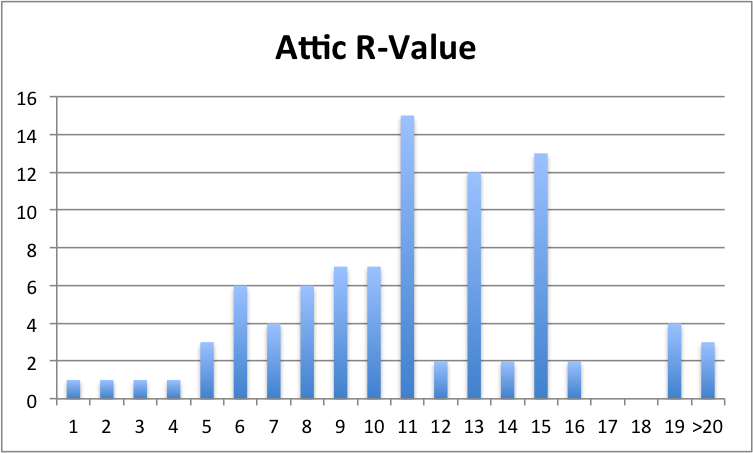

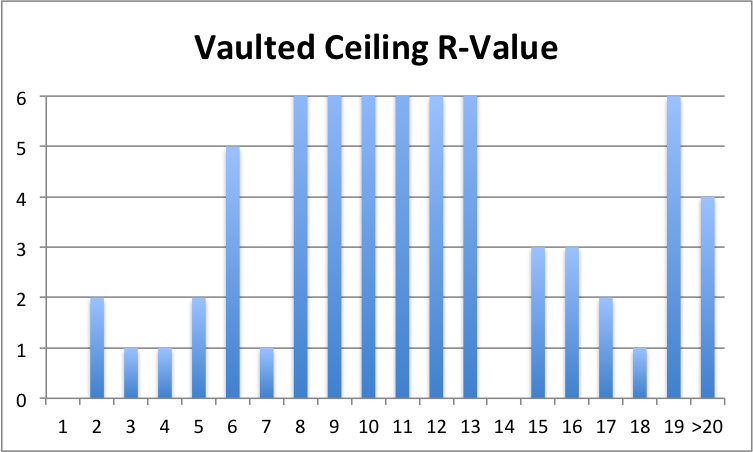

In an effort to get some data to help answer these two questions, a simple poll was created (SEE POLL) that shows participants a picture of an assembly (wall, attic, floor, IR vault) along with a simple description of the construction and insulation details of that assembly. Poll takers were then asked to estimate the R-value of each assembly, much as they might have to in the field.

The goal of this poll is to find out whether contractors or auditors looking at the same wall, floor, attic, and vault come up with:

- An accurate effective assembly R-value

- A consistent set of values
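An "effective" assembly R-value is generally lower than the nominal R-value of the cavity insulation, because framing bridges the insulation. One common way to estimate it is the parallel-path method, sketched below under the assumption that the framing and cavity paths do not interact. The layer values and the 25% framing fraction are illustrative placeholders, not values from the poll or any specific table.

```python
"""Sketch: nominal vs. effective wall R-value using the parallel-path method.

Assumptions: heat flows through two side-by-side paths (framing and cavity)
that do not interact; all layer values and the framing fraction are placeholders.
"""


def series_r(*layers: float) -> float:
    """R-values of layers in series simply add."""
    return sum(layers)


def parallel_path_r(r_framing_path: float, r_cavity_path: float, framing_fraction: float) -> float:
    """Area-weight the U-factors (1/R) of the two paths, then invert."""
    if not 0.0 <= framing_fraction <= 1.0:
        raise ValueError("framing_fraction must be between 0 and 1")
    u_eff = framing_fraction / r_framing_path + (1.0 - framing_fraction) / r_cavity_path
    return 1.0 / u_eff


if __name__ == "__main__":
    # Placeholder layer R-values (h·ft²·°F/Btu) for a 2x4 wall with R-13 batts.
    shared_layers = (0.17, 0.2, 0.45, 0.68)  # outside air film, stucco, drywall, inside air film
    stud, batt = 4.4, 13.0                    # 3.5 in. softwood stud vs. R-13 batt
    framing_fraction = 0.25                   # share of wall area that is framing

    r_frame = series_r(*shared_layers, stud)
    r_cavity = series_r(*shared_layers, batt)
    r_eff = parallel_path_r(r_frame, r_cavity, framing_fraction)
    print(f"nominal cavity insulation: R-{batt:.0f}, effective assembly: R-{r_eff:.1f}")
```

With these placeholder numbers, a wall with R-13 batts works out to an effective value of roughly R-10.6, so an answer can differ depending on whether a respondent reports the nominal or the effective value.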

The poll was announced on the BPI / RESNET Group on LinkedIn and sent to a listserv of about 190 home performance contractors in CA. This group is made up primarily of fairly experienced auditors and contractors, and while the poll is not scientific, the results speak for themselves and should give us pause if we are relying on field estimates of R-values as the basis for a prediction. Forty participants responded over a three-day period.

Question:

If you give 40 different auditors the same four common building assemblies (photos and descriptions) and ask each of them for what they think is the most correct R-value, will the results be consistent?

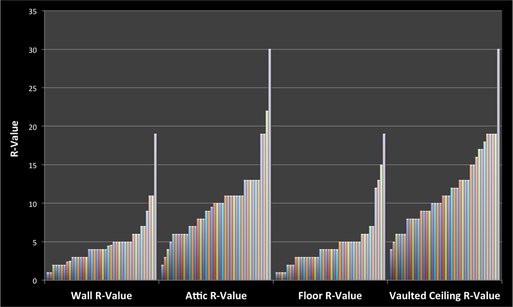

To make it a bit easier to read, the graph below is reorganized and grouped by predicted R-value. Now one can see that there are some values with more agreement than others, but the standard deviation is large: there is not a lot of clear agreement, and there are a lot of outlying values.
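The spread shown in the graph can be summarized with a few standard statistics per assembly. The sketch below uses Python's `statistics` module; the estimates are invented for illustration and are not the poll data. `share_within` is a hypothetical helper that measures how many estimates land near a reference value, such as a look-up table value.

```python
"""Sketch: summarizing how much R-value estimates for one assembly disagree.

The estimates in the example are hypothetical, not the poll results.
"""
import statistics


def summarize_estimates(estimates):
    """Return basic spread statistics for a list of R-value estimates (needs n >= 2)."""
    mean = statistics.mean(estimates)
    stdev = statistics.stdev(estimates)
    return {
        "n": len(estimates),
        "mean": mean,
        "median": statistics.median(estimates),
        "stdev": stdev,
        "cv": stdev / mean,  # coefficient of variation: spread relative to the average
        "min": min(estimates),
        "max": max(estimates),
    }


def share_within(estimates, center: float, tolerance: float) -> float:
    """Fraction of estimates within ±tolerance of a reference value."""
    hits = sum(1 for e in estimates if abs(e - center) <= tolerance)
    return hits / len(estimates)


if __name__ == "__main__":
    hypothetical_attic_estimates = [11, 19, 13, 30, 22, 15, 19, 8, 25, 19]
    stats = summarize_estimates(hypothetical_attic_estimates)
    print({k: round(v, 2) for k, v in stats.items()})
    print("share within ±2 of R-19:", share_within(hypothetical_attic_estimates, 19, 2))
```

The coefficient of variation is useful here because it lets a wall averaging R-10 and an attic averaging R-30 be compared on the same relative scale.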

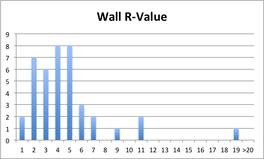

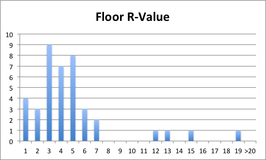

Based on this initial data, it is clear that there is substantial variance in auditors' estimates of R-value. While a more detailed analysis would be welcome, these results are extremely pronounced and argue for software tools that provide R-values for assemblies, rather than leaving an empty box for the contractor or auditor to fill in.

Until there is a model for home performance in which actual performance is tracked and used to calibrate software results, programs that rely on software predictions to drive consumer decision-making or ratepayer funds should require that input values be consistent regardless of auditor, and based on embedded assumptions. It may be fine to allow contractors to override values, but this should be the exception and should trigger QA, or it should go through an established method, such as a quality rating, that uniformly adjusts the underlying assumption.

Additionally, if only an R-value is recorded without some description of the assembly's construction, it may be difficult or even impossible to QA these inputs after the fact, as there may be no way to verify the attributes of the assembly being estimated.
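The override-and-QA policy described above could be represented with a simple record. This is a hypothetical schema, not any vendor's format: each input keeps the assembly description alongside the table value, any override is flagged for QA, and entries with no description are flagged as not auditable.

```python
"""Sketch: recording R-value inputs so overrides and undocumented entries surface for QA.

Hypothetical schema and policy for illustration; not taken from any existing tool.
"""
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class RValueInput:
    """One assembly input as recorded by an auditor."""

    description: str                          # what was observed, kept so QA can verify it later
    table_r_value: float                      # value pulled from the standard look-up table
    override_r_value: Optional[float] = None  # auditor-entered value, if any
    override_reason: str = ""                 # recorded for the reviewer; not used in the logic

    @property
    def r_value(self) -> float:
        """The value the model actually uses."""
        if self.override_r_value is None:
            return self.table_r_value
        return self.override_r_value

    def needs_qa(self) -> bool:
        """Any override is treated as an exception that triggers QA."""
        return self.override_r_value is not None

    def is_auditable(self) -> bool:
        """Without a description there is nothing to check the value against."""
        return bool(self.description.strip())


def qa_queue(inputs: List[RValueInput]) -> List[RValueInput]:
    """Inputs a reviewer should look at: overrides and undocumented entries."""
    return [i for i in inputs if i.needs_qa() or not i.is_auditable()]


if __name__ == "__main__":
    inputs = [
        RValueInput("2x4 wall, no insulation, stucco exterior", 4.0),
        RValueInput("attic, batts plus blown-in", 19.0, override_r_value=30.0,
                    override_reason="auditor measured insulation depth"),
        RValueInput("", 13.0),  # value with no description: cannot be verified later
    ]
    for item in qa_queue(inputs):
        print(item)
```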

Vetting a software vendor's model that lacks standardized assumptions through an engineering review that assumes reasonable or pre-established R-values is simply not sufficient, now that it is apparent how much variance to expect in those input fields in the real world. This analysis strongly suggests that, if not constrained, input values in the field will vary substantially from those used in an upfront review, which renders invalid any conclusions reached without factoring in the quality of field inputs.

For tools that currently require an auditor to input R-values directly, vendors should be required to develop a set of look-up tables that generate a value from a description of the assembly. There are multiple places a vendor could go for established assumption values (NREL, ACCA Manual J, etc.), and the fix could be as simple as adding a drop-down menu to the software. This should have little impact on an auditor in the field, and may in fact reduce the time it takes to complete the model's inputs.

Recommendation: All software used in programs to predict savings should use standard assumptions based on assembly characteristics in order to improve the consistency and quality of input values.

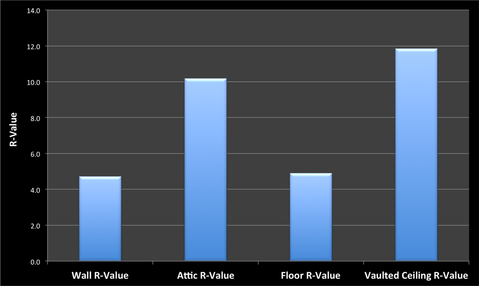

On that note, I want to leave everyone with something to discuss. Given that there really is no "right" answer in this exercise, here is how we did on average for each assembly type.

Question: How good is our collective intelligence, judging by these average predicted values?
