Randomized trials are the gold standard for many economists, and on a small scale in a clinical or research environment, trials can certainly provide a high level of assurance of efficacy. However, it’s simply not feasible or economical to measure or assess energy efficiency programs at scale using Randomized Control Trials (RCTs) and Randomized Encouragement Designs (REDs).

This is not to say that current EM&V methods shouldn't be improved and standardized. In fact, much of my professional life has been devoted to this effort. However, while perfect accuracy is a lofty goal, the attempt to achieve it must also support the market need for transparency, predictability, and cost efficiency.

Where possible, small-scale studies using randomized encouragement designs and randomized control trials should absolutely be applied to ground-truth transparent quasi-experimental results and to quantify uncertainty. However, these clinical approaches are fundamentally inappropriate beyond a research setting.

Randomized control trials typically divide participants into two groups, with one group receiving the prescribed "treatment" and the other getting nothing or a placebo. Doing this with self-selecting efficiency programs would require private companies to not treat a subset of their expensive-to-acquire customers, which is simply not feasible. If a customer wants a new furnace, you can't stop them, nor should you.

An alternative to the RCT is a randomized encouragement design (RED). In this approach, all customers can be treated, but a randomly defined subset is given additional encouragement to participate (imagine a salesperson who gets an extra $250 for making a sale off the list, while all customers get the same deal). With a large enough sample and high enough participation, it is possible to calculate the additional savings in the encouraged group.
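To make that calculation concrete, here is a minimal sketch of the standard Wald (instrumental variable) estimate that encouragement designs typically rely on: the difference in average usage change between the two groups, divided by the difference in participation rates. The function name, the record layout, and the numbers are hypothetical illustrations, not data from any study.

```python
from statistics import mean


def wald_savings_estimate(encouraged, not_encouraged):
    """Estimate per-participant usage change from a RED.

    Each record is a (participated, usage_change_kwh) tuple, where a
    negative usage change means the customer used less energy.
    """
    # Intent-to-treat effect: compare everyone in each group,
    # whether or not they actually participated.
    itt = mean(u for _, u in encouraged) - mean(u for _, u in not_encouraged)

    # How much the encouragement raised the participation rate.
    uptake_gap = (mean(1.0 if p else 0.0 for p, _ in encouraged)
                  - mean(1.0 if p else 0.0 for p, _ in not_encouraged))
    if uptake_gap <= 0:
        raise ValueError("encouragement did not raise participation")

    # Scale the diluted group-level effect back up to the customers
    # who participated because of the encouragement.
    return itt / uptake_gap


# Hypothetical toy data: 2 of 4 encouraged customers participate,
# versus 1 of 4 in the comparison group.
encouraged = [(True, -1000.0), (True, -1000.0), (False, 0.0), (False, 0.0)]
not_encouraged = [(True, -1000.0), (False, 0.0), (False, 0.0), (False, 0.0)]
estimate = wald_savings_estimate(encouraged, not_encouraged)
```

Because the estimate divides by the participation gap, a small gap magnifies any noise in the group averages, which is why participation matters so much in what follows.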

At face value, REDs are technically possible in energy efficiency. However, in practice there are a host of barriers that call their feasibility and efficacy into question.

Unlike a drug test or a top-down weatherization program, the market-based EE landscape has a huge diversity of business models, customers, and technologies being deployed. You can’t measure once and extrapolate a given finding into the heterogeneous world of efficiency programs and business models.

Sending a strong enough signal to drive the additional adoption required for the statistical power necessary to draw conclusions will be incredibly difficult and expensive. Many channels manage hundreds or even thousands of independent contractors, who in turn employ many thousands of salespeople. Motivating both contractors and salespeople to provide this extra encouragement would be both logistically challenging and costly.

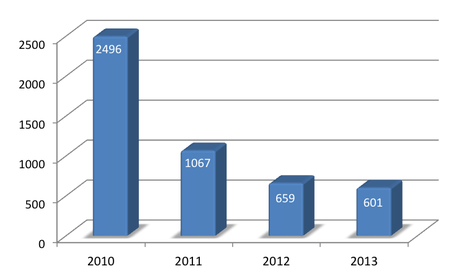

The initial experience of running a RED in energy efficiency involved fully subsidized low-income weatherization in a small market. Unlike highly diverse, much larger private markets, weatherization is centrally controlled, has a very defined customer, and allows a top-down engagement strategy. In this first RED test, door knockers were paid specifically to target just the “encouragement” customers and were highly motivated to get them to sign up for a free product. This very intensive and expensive approach still resulted in only a 6% encouragement rate, which was only borderline significant and insufficient to provide confidence in savings.

In the energy efficiency marketplace, customers come from all corners (but mostly from referrals) and everyone is already extremely motivated to sell. Contractors spend thousands of dollars acquiring each customer, and salespeople are often offered commissions as high as 10%. So the question is, how much extra money would it take to give them enough additional encouragement to see a statistically significant jump in close rate when their very livelihood requires that they sell to every customer all the time? The next question is, where would the money for this encouragement come from?

Unless the “encouragement” group adopts at significantly higher rates than the overall population, REDs simply won't deliver statistically significant results.
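A rough way to see why is the textbook two-sample power calculation, with the detectable effect diluted by the participation gap. The sketch below assumes equal-sized groups, a normal approximation, and a two-sided test. The function name and all input values are hypothetical, and treating the 6% figure from the weatherization example as the participation gap is my assumption about how to read that number.

```python
import math
from statistics import NormalDist


def required_customers_per_group(savings_per_participant, usage_std_dev,
                                 uptake_gap, alpha=0.05, power=0.80):
    """Approximate customers needed in each group of a RED.

    savings_per_participant and usage_std_dev share the same units
    (for example kWh per year); uptake_gap is the difference in
    participation rates between the encouraged and comparison groups.
    """
    if not 0 < uptake_gap <= 1:
        raise ValueError("uptake_gap must be in (0, 1]")

    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_power = NormalDist().inv_cdf(power)

    # Only the extra participants contribute savings, so the average
    # difference between groups is the per-participant savings diluted
    # by the participation gap.
    diluted_effect = savings_per_participant * uptake_gap

    n = 2 * (z_alpha + z_power) ** 2 * usage_std_dev ** 2 / diluted_effect ** 2
    return math.ceil(n)


# Hypothetical inputs: 1,000 kWh savings per participant and a
# 3,000 kWh standard deviation in annual usage change.
n_small_gap = required_customers_per_group(1000, 3000, uptake_gap=0.06)
n_large_gap = required_customers_per_group(1000, 3000, uptake_gap=0.30)
```

Since the required sample grows with the inverse square of the participation gap, shrinking the gap from 30 points to 6 points multiplies the number of customers needed by roughly 25.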

Almost as a side note, these funds would, for tax, logistical, and business reasons, almost certainly have to flow through contractors to their employees, not as taxable income directly to employees from a program or government body.

So this brings up an issue of fungibility. If there is a new revenue source, and contractors are competing for customers, it is going to be next to impossible to ensure that contractors don’t improve the customer's offer to win deals. If the encouraged customer gets a different offer than those in the control group, this would undermine the validity of the trial.

But wait, there’s more… and this is the real issue.

In our pursuit of the perfect measure of savings, we forgot the most important part, which is where the money comes from to get to scale.

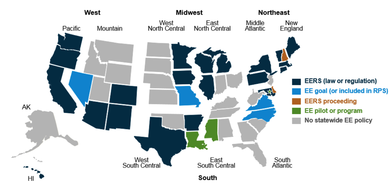

Ratepayer-surcharge-based programs deliver only about $8 billion a year into the market, resulting in only about $16 billion of total investment. While this sounds like a lot, it pales in comparison to the technical potential of efficiency investments at a lower marginal cost than alternative distributed energy resources. For efficiency to scale, we must move beyond current consumer financing options and instead finance the upfront costs through project finance: the infrastructure investors who finance power plants, rather than credit cards.

In order to attract this infrastructure investment, we need manageable risk, not uncertainty. Relying on randomized studies that can’t be replicated by market participants and can’t be quantified until well into a contract period creates uncertainty that makes private investment more costly or potentially impossible. When you can’t estimate what a finding will be until after the fact, you turn investment into a guessing game.

Allowing the measurement tail to wag the efficiency dog creates an existential dilemma where the pursuit of perfect measurement could mean we can no longer manage risk and attract the capital we need to scale efficiency, meaning that more power will need to be generated, with the cost of this additional generation recovered through rates.

However, there is good news. There have been massive advances in measurement that are apparently not well understood.

At Open Energy Efficiency, we are now metering energy efficiency, which means we are automatically calculating monthly, daily, and hourly resource curves, both across utility portfolios and for individual market participants, in near real time directly from normalized smart meter data. This open source platform (based on a process in California called CalTRACK) is helping some of the largest utility programs and energy efficiency companies in the United States track their savings and conduct analytics in real time for the first time ever.

We combine that with a distributed cloud-based platform that can do similar real-time normalizations for the full population's usage and metadata. Entire states are now implementing the ability to track and analyze meter data at both a project and population level.

Open source analytics tools allow regulators, utilities, and measurement professionals to filter the population by attributes such as location, sector, climate zone, and load shape (or any other metadata at our disposal). These filters then generate quasi-control groups that can be tested against historical data, allowing experts to analyze their options and negotiate a reasonable control group that fairly represents the naturally occurring population-level exogenous effects.

The parameters of that quasi-control can be locked in prior to starting a given program or procurement. Historical trends can be published, and the exogenous effect can be tracked on a longitudinal basis. Now we can not only track net impact but also manage the risk.
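As a simplified illustration of the idea, the sketch below filters a population by two attributes, measures the average usage change among non-participants in that cohort as the exogenous effect, and subtracts it from the participants' change. This is not the CalTRACK method or our platform's code; the `Site` fields, the function names, and the numbers are all hypothetical, and a real comparison group would involve many more attributes and far larger samples.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Site:
    climate_zone: str
    sector: str
    participant: bool
    baseline_kwh: float    # normalized usage before the program
    reporting_kwh: float   # normalized usage after the program


def pct_change(site):
    """Fractional change in normalized usage (negative means a reduction)."""
    return (site.reporting_kwh - site.baseline_kwh) / site.baseline_kwh


def net_savings_fraction(sites, climate_zone, sector):
    """Participant savings net of the cohort's exogenous usage trend."""
    # The filter parameters are what would be agreed on and locked in
    # before the program starts.
    cohort = [s for s in sites
              if s.climate_zone == climate_zone and s.sector == sector]
    treated = [pct_change(s) for s in cohort if s.participant]
    comparison = [pct_change(s) for s in cohort if not s.participant]
    if not treated or not comparison:
        raise ValueError("cohort needs participants and non-participants")

    exogenous_change = mean(comparison)   # what happened anyway
    gross_change = mean(treated)          # what participants experienced
    return -(gross_change - exogenous_change)  # positive means savings


# Hypothetical toy population.
sites = [
    Site("zone-A", "residential", True, 10000.0, 8500.0),
    Site("zone-A", "residential", False, 10000.0, 9700.0),
    Site("zone-B", "commercial", False, 50000.0, 51000.0),
]
net = net_savings_fraction(sites, "zone-A", "residential")
```

In this toy case participants dropped 15% while comparable non-participants dropped 3%, so about 12 points of the reduction would be attributed to the program.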

Are these quasi-controls as statistically powerful as is theoretically possible in a properly conducted RCT or RED? The answer is clearly no. However, the findings of the early REDs that have been conducted in efficiency do not differ substantially from those of current EM&V methods.

Randomized encouragement trials are another tool in the box to tune and improve the quasi-experimental approach, but they should only be used as research in cases where it is likely they can encourage enough adoption to be valid while still being economically feasible to conduct. The results should not replace current methods, but instead be used to improve them.

While both energy efficiency and EM&V need disruption and innovation, market practitioners in energy efficiency have different constraints and benefits than those focused on pure research and energy outcomes. I hope that we can refocus our discussions with the academic economics community to help research and address the much more pressing questions associated with the valuing of time and locational efficiency impacts, accurate calculation of avoided carbon, and the development of open source, transparent and replicable approaches to measurement that can support markets and investment.
